Using components with known vulnerabilities

In this post, I talk about how using components with known vulnerabilities in your technology stack can really hurt you.

I don't know about you, but these days, when I browse to a website, I shove on Chrome developer tools, just to take a look at what I'm getting. Here's why.

Introduction

When you rock up at a website, especially one you don't really know or necessarily trust, there's no telling the risk you're taking. A responsible site owner will make every effort to ensure that you, as a client, will have a safe experience while exploring their site, be it through simple browsing, signing up, signing in, or making a cash purchase. It's important that a client is able to do these things securely. Of course it is.

As an attacker, you don't care about the great user experience. You care about flaws in it that might provide the opportunity to exploit a vulnerability.

So, when a site operator builds their system, a number of key components are involved: a web server, an application server (or something that runs APIs or web services), a database server, a network and so on and so forth.

Some of these things are built, some of these things are bought and some of these things are borrowed. In this post, I'll talk about the risks associated with each and a variety of mitigations you can consider implementing.

Where do we start?

Built, bought and borrowed.

Here are some examples:

You build many of your web applications, be it using ASP.Net, PHP, Java and the like.

You buy your servers: database servers such as MSSQL or MySQL, and application or web servers such as IIS, Apache or NGINX.

You borrow JavaScript frameworks, libraries and the like. You don't pay for them and you don't build them. No, you freely consume them and integrate them into your overall solutions.

If you buy in a complete solution, you're possibly getting all of the above, completely out of your control. You also buy and borrow things from cloud providers, on top of which you then most likely build stuff.

In OWASP terms, this is covered by number nine in the OWASP Top 10 chart: OWASP-A9 'Using Components with Known Vulnerabilities', hence the title of this post.

Recent case history

Now, you may reason that because it's number nine in the chart, it's a low priority, but in my view you'd be wrong. For example, Equifax lost 143 million customer records a month or so back, mainly because they were running an out-of-date version of the Apache Struts framework. That's OWASP-A9. What made it worse was that the vulnerabilities were well known and yet the organisation seemingly did nothing to mitigate the risks associated with them. They didn't patch.

To put spot nine in context: the OWASP Top 10 is refreshed infrequently, and each edition contains only the globally recognised killer problems in web application security. There are many, MANY other vulnerabilities out there, but the Top 10 recognises the absolute whoppers.

So, OWASP-A9 is at the top table, along with injection, cross-site scripting, knackered authentication and the rest.

There have been many incidents like Equifax's, both before and since, and that will continue to be the case for as long as organisations don't keep their things up to date.

My own experience

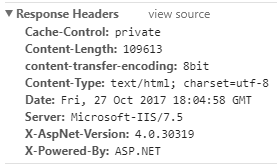

Right, going back to my earlier point about using Chrome's developer tools whenever I visit a new site. One of the reasons I do this is to inspect the headers that get returned and the content that gets served. Pressing trusty F12 before you load the site gives you a wealth of detail about what's going on. Here are a couple of examples:

The above image shows a few interesting things, things that should be of interest to an operations bod, but that would definitely be of interest to an attacker:

- The host is running an out-of-date version of a web server (in this case Microsoft IIS 7.5, likely running on Windows Server 2008 R2)

- An older, unsupported version of the ASP.Net framework is in use (in this case 4.0; at the time of writing, the current version is 4.7.1)

- No security headers are implemented
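
If you'd rather not eyeball every response in developer tools, the three observations above can also be checked with a few lines of code. This is a minimal Python sketch, assuming you already have the response headers as a dictionary (or fetch them with the `fetch_headers` helper, which sends a simple HEAD request). Both header lists are common examples I've picked for illustration, not an authoritative baseline, and the sample values are assumed rather than copied from the screenshot.

```python
import urllib.request
from typing import Dict, List, Tuple

# Headers that commonly reveal the server or framework and its version.
# Illustrative, not exhaustive.
DISCLOSURE_HEADERS = ("Server", "X-Powered-By", "X-AspNet-Version", "X-AspNetMvc-Version")

# A few widely recommended security headers (an assumed baseline for this sketch).
SECURITY_HEADERS = (
    "Strict-Transport-Security",
    "Content-Security-Policy",
    "X-Content-Type-Options",
    "X-Frame-Options",
)


def fetch_headers(url: str) -> Dict[str, str]:
    """Send a HEAD request and return the response headers (repeated headers collapse to one)."""
    request = urllib.request.Request(url, method="HEAD")
    with urllib.request.urlopen(request, timeout=10) as response:
        return dict(response.headers.items())


def audit_headers(headers: Dict[str, str]) -> Tuple[Dict[str, str], List[str]]:
    """Return (version-revealing headers found, security headers missing)."""
    # HTTP header names are case-insensitive, so compare them in lower case.
    lowered = {name.lower(): value for name, value in headers.items()}
    disclosed = {
        name: lowered[name.lower()]
        for name in DISCLOSURE_HEADERS
        if name.lower() in lowered
    }
    missing = [name for name in SECURITY_HEADERS if name.lower() not in lowered]
    return disclosed, missing


if __name__ == "__main__":
    # Assumed values typical of an IIS 7.5 / ASP.NET 4.0 host. Swap in
    # fetch_headers("https://...") to audit a live site you're permitted to test.
    sample = {
        "Server": "Microsoft-IIS/7.5",
        "X-Powered-By": "ASP.NET",
        "X-AspNet-Version": "4.0.30319",
        "Content-Type": "text/html; charset=utf-8",
    }
    disclosed, missing = audit_headers(sample)
    print("Disclosed:", disclosed)
    print("Missing:", missing)
```

Anything in the first result is fingerprinting material handed to an attacker for free; anything in the second is a protection the site isn't asking browsers to apply.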

I cover security headers in another post, but what about the other two points?

The fact that the website is disclosing quite sensitive information is a concern in itself, because to an attacker that information is a potential gold mine.

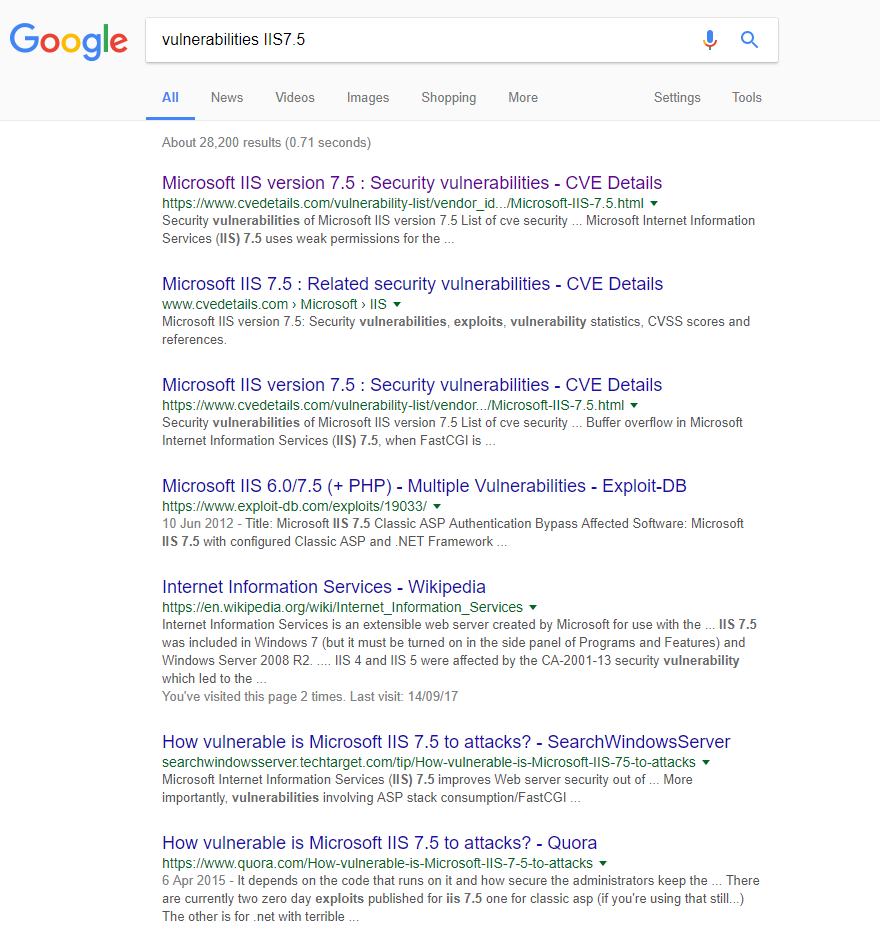

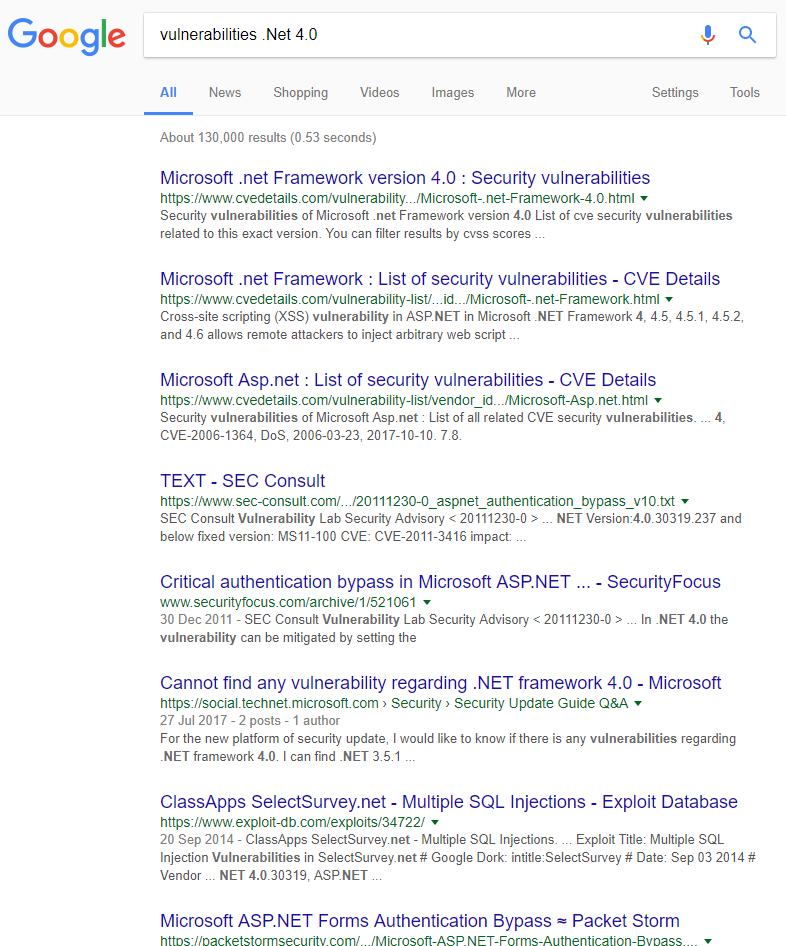

So, we know an older version of IIS is supported, as is an older version of ASP.Net. As we know this, we can go off and make some enquiries around known vulnerabilities in either or both:

Or...

As you can see, it's pretty damn easy to identify the technology stack a website is using; this is known as 'fingerprinting'. It's equally easy to go and find ways to exploit the known vulnerabilities in that stack.

Obfuscating (masking) or faking this information can confuse an attacker and make your site a less attractive target, so the more opportunistic types may go elsewhere. Updating the components in use makes them more difficult to exploit, whether you're leaking this information or not. Keeping components up to date and masking them in the response headers makes it harder still for an attacker to launch an attack against your systems based on what they've fingerprinted.
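
As one illustration of masking, here's a minimal sketch of a Python WSGI middleware that strips version-revealing headers and swaps the `Server` value for something generic. It assumes a Python web app, which isn't the stack in the screenshots; on IIS or Apache you'd do the equivalent in the server's own configuration. The `STRIP` set and the `"webserver"` placeholder value are hypothetical choices for this example.

```python
from typing import Callable, Iterable, List, Optional, Tuple

Headers = List[Tuple[str, str]]

# Version-revealing headers to drop entirely (illustrative; extend for your stack).
STRIP = {"x-powered-by", "x-aspnet-version", "x-aspnetmvc-version"}


def mask_headers(headers: Iterable[Tuple[str, str]],
                 server_value: Optional[str] = "webserver") -> Headers:
    """Drop revealing headers; replace Server, or drop it if server_value is None."""
    cleaned: Headers = []
    for name, value in headers:
        lname = name.lower()
        if lname in STRIP:
            continue
        if lname == "server":
            if server_value is None:
                continue
            value = server_value  # a made-up generic value that reveals nothing
        cleaned.append((name, value))
    return cleaned


class MaskHeadersMiddleware:
    """Wrap a WSGI app so its response headers pass through mask_headers()."""

    def __init__(self, app: Callable, server_value: Optional[str] = "webserver") -> None:
        self.app = app
        self.server_value = server_value

    def __call__(self, environ, start_response):
        # Intercept start_response so headers are cleaned before they're sent.
        def masked_start_response(status, headers, exc_info=None):
            return start_response(status, mask_headers(headers, self.server_value), exc_info)

        return self.app(environ, masked_start_response)
```

You'd wrap an existing app with `app = MaskHeadersMiddleware(app)`. Bear in mind that a front-end web server or reverse proxy usually adds its own `Server` header, which this can't touch, and that masking only slows fingerprinting down: it doesn't fix the underlying vulnerabilities.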

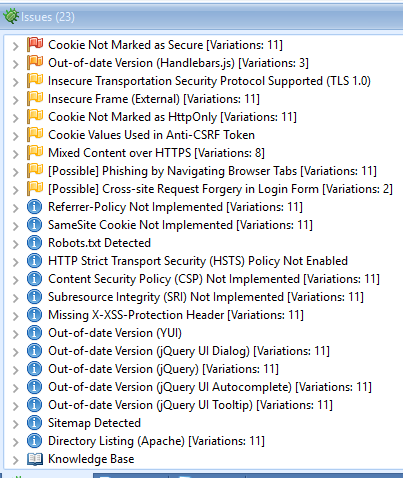

OK, that covers off the built and bought parts of your stack. What about the borrowed? Take a look at this:

Ignoring everything else, check out the obsolete versions of Handlebars, jQuery and YUI. These are components your developers consume from package managers or CDNs. They have no control over their underlying quality and, more often than not, they have no process to ensure they're kept up to date. And they go stale really quickly.

As they age, they start to stink; in other words, they become vulnerable to exploits. For example, earlier versions of jQuery contained XSS vulnerabilities, but as a developer you wouldn't necessarily know this, because at the time the particular feature was delivered, everything was fine. Same with Handlebars. Unless you keep these libraries and frameworks reasonably up to date, you have attack surfaces in your applications that you have absolutely no control over. This isn't a good place to be.
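
Spotting stale borrowed components can be partly automated. The sketch below is a rough, regex-based Python check: it pulls a library name and version out of script URLs that follow the common `name-1.2.3.min.js` naming convention and compares them against a minimum version. The `MINIMUM_VERSIONS` table is hypothetical; real baselines should come from each project's security advisories. Purpose-built tools such as Retire.js or OWASP Dependency-Check do this job far more thoroughly.

```python
import re
from typing import Dict, List, Tuple

# Hypothetical baselines for illustration only; take real minimum versions
# from each project's security advisories.
MINIMUM_VERSIONS: Dict[str, Tuple[int, ...]] = {
    "jquery": (3, 5, 0),
    "handlebars": (4, 7, 7),
}

# Assumes the common "name-1.2.3.js" / "name-v1.2.3.min.js" file naming convention.
SCRIPT_PATTERN = re.compile(
    r"(?P<lib>[a-z]+)[-.]v?(?P<ver>\d+(?:\.\d+)+)(?:\.min)?\.js",
    re.IGNORECASE,
)


def parse_version(text: str) -> Tuple[int, ...]:
    """Turn "1.11.3" into (1, 11, 3) so versions compare numerically."""
    return tuple(int(part) for part in text.split("."))


def is_older(version: str, minimum: Tuple[int, ...]) -> bool:
    """Compare after padding with zeros, so "3.5" counts as equal to (3, 5, 0)."""
    current = parse_version(version)
    width = max(len(current), len(minimum))

    def pad(parts: Tuple[int, ...]) -> Tuple[int, ...]:
        return parts + (0,) * (width - len(parts))

    return pad(current) < pad(minimum)


def find_stale_libraries(script_urls: List[str]) -> List[Tuple[str, str]]:
    """Return (library, version) pairs older than the assumed baseline."""
    stale: List[Tuple[str, str]] = []
    for url in script_urls:
        match = SCRIPT_PATTERN.search(url)
        if match is None:
            continue  # unrecognised naming; a real scanner would inspect file contents
        lib = match.group("lib").lower()
        version = match.group("ver")
        minimum = MINIMUM_VERSIONS.get(lib)
        if minimum is not None and is_older(version, minimum):
            stale.append((lib, version))
    return stale


if __name__ == "__main__":
    urls = [
        "https://code.jquery.com/jquery-1.11.3.min.js",
        "/static/handlebars-v4.0.5.js",
        "/static/app.js",
    ]
    for lib, version in find_stale_libraries(urls):
        print(f"{lib} {version} is older than the assumed baseline")
```

Matching on file names is the weakest form of this check (a renamed or bundled file slips straight through), which is why the dedicated tools also look inside the files themselves.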

A well executed XSS attack might whisk an unsuspecting user somewhere they don't want to be, such as a site that looks like the one they intended to visit, but subtly isn't. Then they're in trouble.

A dodgy vulnerability on an ancient web server might allow an attacker to take control of it and, worse, of other servers it integrates with, such as database servers. Once they have that, they might well find it relatively easy to compromise data. And then you're in deep shit.

What can we do?

I'm seeing an increase in comments on Twitter from people saying "patching is hard!". I think these mostly come from SysAdmins / Ops folk. I kinda get their point, especially if you've got myriad systems, services, platforms etc to worry about and there's also a horrible spread of technology in play.

That said, you need to take a risk-based approach to all this. As far as I'm concerned, if your systems handle personal data, payment card data and the like, then they get prioritised above all else (see GDPR and PCI DSS compliance).

On that basis, you make decisions around the risks of that data being compromised in some way and the potential consequences of that happening. In GDPR and PCI DSS terms, it could be particularly painful, to say the least.

Then, you weigh up the difference between the costs involved in keeping your systems up to date versus the material (and human) costs involved in neglecting to do so.
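
To make the "prioritised above all else" rule concrete, here's a toy Python sketch that orders systems for patching: anything handling personal or payment card data comes first, then systems with more known-vulnerable components. The `System` fields and the example system names are hypothetical, and a real risk assessment would weigh far more than two flags and a count.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class System:
    """A hypothetical inventory record; the fields are illustrative."""
    name: str
    handles_personal_data: bool
    handles_card_data: bool
    known_vulnerable_components: int


def patch_order(systems: List[System]) -> List[str]:
    """Order systems needing patches: regulated data first, then most exposure."""
    needing_patches = [s for s in systems if s.known_vulnerable_components > 0]
    ranked = sorted(
        needing_patches,
        # Tuples compare element by element, so the data flag always outranks the count.
        key=lambda s: (s.handles_personal_data or s.handles_card_data,
                       s.known_vulnerable_components),
        reverse=True,
    )
    return [s.name for s in ranked]


if __name__ == "__main__":
    estate = [
        System("intranet-wiki", False, False, 5),
        System("checkout", False, True, 1),
        System("crm", True, False, 3),
        System("marketing-site", False, False, 0),
    ]
    print(patch_order(estate))  # ['crm', 'checkout', 'intranet-wiki']
```

Using a tuple as the sort key is what enforces the rule: a system holding regulated data outranks an unregulated one however many vulnerable components the latter has.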

So, a series of conversations is needed between those who deliver and operate technology and those directly affected when it all goes horribly wrong, i.e. 'the business'.

"Can we live with the consequences of not doing this? Yes or no"

If the answer is "Yes", and you've been presented with the full picture or risk profile, then you can accept that risk in an informed and mature way.

If the answer is "No" then you patch the shit out of your things, no matter the resourcing it requires.

Conclusion

BadRabbit, Equifax, WannaCry: if you were a victim of any of these events, you can attribute it to the use of components with known vulnerabilities.

If your position is that "patching is hard", then you need to get that sentiment in front of the CEO, who may well be the person who loses everything dear to them, including their business, their staff and their reputation, should it all go to hell.

If you operate systems, make sure you run the most stable and secure versions of the stuff developers don't have any responsibility for.

If you develop software, be mindful of pulling in libraries or other components that might bite you on the arse, post-delivery.

If you operate a network that allows all of the above to move smoothly, make certain your hardware / firmware / software is nice and safe.

It's common sense.

Thanks for reading. I really appreciate it.